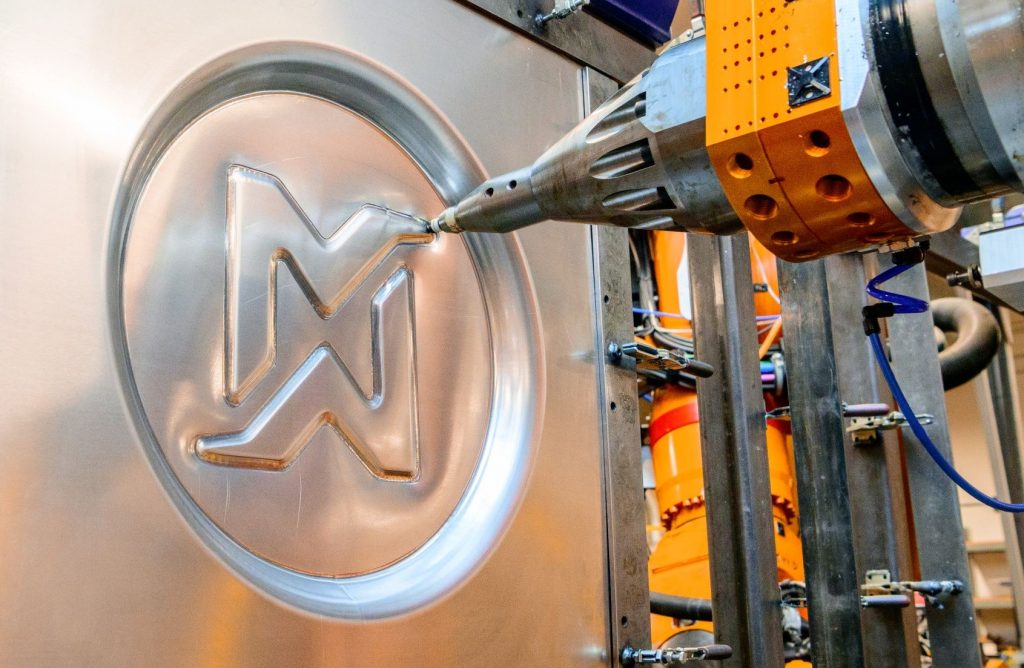

Machina Labs uses a novel method of sheet metal forming with two robotic arms, drastically reducing production time from months to hours. Co-founder and CEO Ed Mehr has an engineering background in smart manufacturing and artificial intelligence, previously leading the development of the world’s largest metal 3D printer at Relativity Space. He has also worked at SpaceX, Google, and Microsoft and was previously the CTO at Cloudwear (now Averon).

Prosthetic limbs are often associated with either nude-colored imitations of human limbs or high-tech models optimized for athletes. But what about a third category that captures the fun and imagination of children while still meeting functional requirements? That’s where Open Bionics comes in, founded by Joel Gibbard and Samantha Payne. Through partnerships with Disney and Lucasfilm, Open Bionics has created a line of cool and inspiring prosthetic limbs. Joel Gibbard, a robotics graduate from the University of Plymouth, UK, co-founded Open Bionics with the goal of revolutionizing the industry with accessible and advanced bionic arms. Ready to invest in their mission? Joel shares why you should be.

Did you know that the recycling industry in the US is a profitable venture? Recyclers take recyclable material, refine it, and sell it back to companies at a lower price than producing the material from scratch. But with ~300 million tons of trash generated annually in the US, up to 75% of which is recyclable, why aren’t we maximizing recycling efforts? Less than a third of that trash actually gets recycled. Areeb, co-founder of Glacier, dives into the complex reasons behind this and shares how Glacier is tackling the issue head-on.

Benjamin Rosman, co-founder of Deep Learning Indaba, presented at ICRA 2022 on their mission to strengthen AI and ML communities across Africa. Based in South Africa, Deep Learning Indaba hosts annual meetups in different countries and encourages grassroots communities to run their own local events. Join the movement to boost AI and ML in Africa!

SoftBank Robotics aims to create value for humanity through robotics and AI technology. Their focus is on scalable industries with gaps in task-to-service value, such as the cleaning industry, which is dominated by manual labor. Whiz, an indoor, mobile, autonomous vacuum cleaner, is the SoftBank Robotics product that best embodies their mission. By automating single tasks, Whiz frees the workforce to upskill and take on higher-value work, while providing better and safer environments for that workforce. SoftBank Robotics provides proof of performance and creates efficiency, allowing for more frequent cleaning and reducing the risk of an unsafe environment. This creates opportunities in industries like hospitality, senior living, and education.

Luxonis’ Founder and CEO, Brandon Gilles, shares how his team created the versatile OAK-D perception platform. With expertise in embedded hardware and software, Gilles leveraged his experience from Ubiquiti, a company that transformed networking with network-on-a-chip architectures. The OAK-D, which uses Intel Myriad X for power-efficient perception computations, offers more than just depth sensing capabilities. It also provides access to open-source computer vision and AI packages pre-calibrated to the optics system. Thanks to its system-on-a-module design, Luxonis can easily produce various hardware platform variations to fit customers’ needs. Stay tuned for more updates.

ApexAI is improving ROS2 for use in autonomous vehicles and bridging the gap between the automotive and robotics industries. They aim to shorten the current multi-year development process with their technology showcased at the Indy Autonomous Challenge. Apex.OS is a certified software framework and SDK that enables developers to create safe and certified autonomous driving applications compatible with ROS2.

Davide Scaramuzza discusses Event Cameras, a step-change in camera innovation that has the potential to accelerate vision-based robotics applications. Event Cameras operate differently from traditional cameras, unlocking benefits such as extremely high-speed imaging, removal of the concept of “framerate”, and low power consumption.

One of my favorite emerging technologies with a ton of promise for perception.
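
To make the "no framerate" idea a little more concrete, here is a minimal sketch of how a single event-camera pixel is commonly modeled: it fires an event whenever the log-intensity it sees changes by more than a contrast threshold. The function name `generate_events`, the sample data, and the threshold value are my own assumptions for illustration, not anything taken from the episode or from a specific sensor.

```python
import math

def generate_events(samples, threshold=0.4):
    """Simulate one event-camera pixel (illustrative model only).

    samples: list of (timestamp, intensity) pairs with intensity > 0.
    Returns a list of (timestamp, polarity) events, where polarity is
    +1 for a brightness increase and -1 for a decrease.
    """
    events = []
    # Reference level: log-intensity at the last emitted event.
    ref = math.log(samples[0][1])
    for t, intensity in samples[1:]:
        delta = math.log(intensity) - ref
        # Emit one event per threshold crossing since the reference level.
        while abs(delta) >= threshold:
            polarity = 1 if delta > 0 else -1
            events.append((t, polarity))
            ref += polarity * threshold
            delta = math.log(intensity) - ref
    return events

# A static scene produces no data at all.
static_pixel = [(0.0, 1.0), (0.001, 1.0), (0.002, 1.0)]
print(generate_events(static_pixel))

# A pixel that brightens and then darkens produces signed events.
changing_pixel = [(0.0, 1.0), (0.001, 3.0), (0.002, 1.0)]
print(generate_events(changing_pixel))
```

In this model an unchanging pixel emits nothing, which hints at where the low power consumption comes from, and each pixel reports changes on its own schedule instead of waiting for a global frame.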

I spoke to Maxime Gariel, CTO of Xwing, about the autonomous flight technology they are developing.

At Xwing, they are converting traditional aircraft into remotely operated aircraft. They do this by retrofitting planes with multiple sensors including cameras, radar, and lidar, and by developing sensor fusion algorithms to allow their planes to understand the world around them, using highly accurate perception algorithms.

Xwing’s autonomous flight technology allows a plane to taxi at the airport, take off, fly to a destination, avoid airborne and ground threats, and land, all without any human input. This technology not only enables autonomous flight but may also enhance the safety of manned aircraft by improving a plane’s ability to understand its surroundings.

The unique challenges of operating autonomously in the air piqued my interest. Unlike with self-driving cars, when you are in the sky there are no features you can use to estimate depth and scale using video from a single camera. With objects flying towards you at hundreds of miles an hour, you need to be able to make those measurements rapidly. To solve this, they developed a sensor fusion package that combines video, radar, and ADS-B.
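
As a rough illustration of why a single camera is not enough, consider that a camera can tell you the direction to another aircraft but not how far away it is, while a radar return can supply the missing range. The sketch below combines the two into a 3D position. This is not Xwing's algorithm; the function `bearing_range_to_position`, the axis convention (x forward, y right, z up), and the numbers are all assumptions made for the example.

```python
import math

def bearing_range_to_position(azimuth_deg, elevation_deg, range_m):
    """Combine a camera bearing with a radar range (illustrative only).

    azimuth_deg:   angle to the right of the nose, from the camera.
    elevation_deg: angle above the horizon, from the camera.
    range_m:       distance to the target, from the radar.
    Returns (x, y, z) in meters with x forward, y right, z up.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # distance projected onto the horizon plane
    x = horizontal * math.cos(az)
    y = horizontal * math.sin(az)
    z = range_m * math.sin(el)
    return (x, y, z)

# The same camera bearing is consistent with very different positions:
# only the radar range tells a nearby target from a distant one.
for assumed_range in (500.0, 5000.0):
    print(assumed_range, bearing_range_to_position(10.0, 2.0, assumed_range))
```

A real system would also have to deal with measurement noise, time alignment between sensors, and tracking over time, none of which is shown here.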

Maxime Gariel

Maxime Gariel is the CTO of Xwing, a San Francisco-based startup whose mission is to dramatically increase human mobility using fully autonomous aerial vehicles. Xwing is developing a Detect-And-Avoid system for unmanned and remotely piloted vehicles. Maxime is a pilot, but he is passionate about making airplanes fly themselves.

Maxime joined Xwing from Rockwell Collins where he was a Principal GNC Engineer. He worked on autonomous aircraft projects including DARPA Gremlins and the AgustaWestland SW4 Solo autonomous helicopter. Before becoming Chief Engineer of the SW4 Solo’s flight control system, he was in charge of the system architecture, redundancy, and safety for the project.

Before Rockwell Collins, he worked on ADS-B based conflict detection as a postdoc at MIT and on autoland systems for airliners at Thales. Maxime earned his MS and PhD in Aerospace Engineering from Georgia Tech and his BS from ISAE-Supaéro (France).

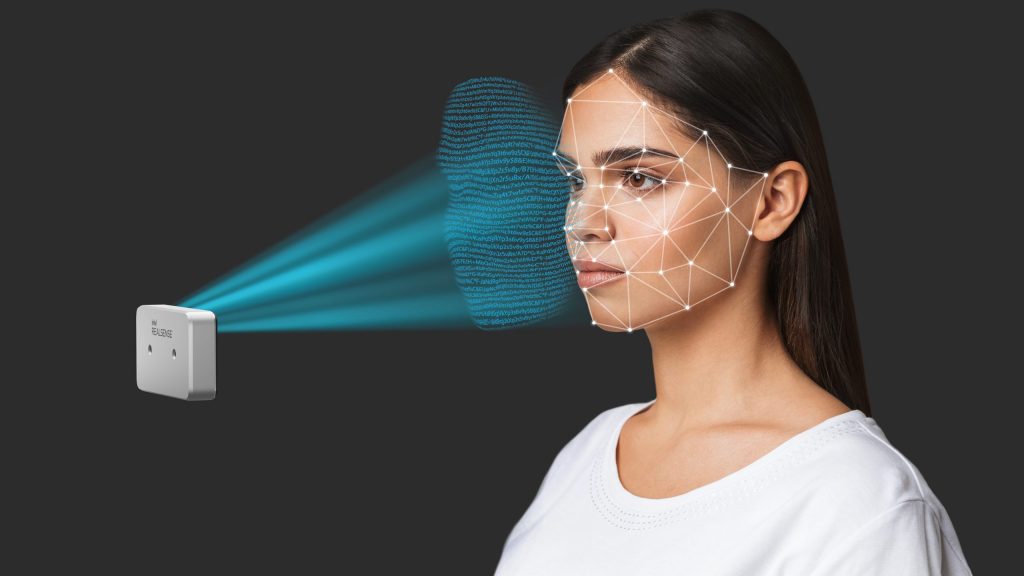

Intel RealSense is known in the robotics community for its plug-and-play stereo cameras. These cameras make gathering 3D depth data a seamless process, with easy integrations into ROS to simplify the software development for your robots. From the RealSense team, Joel Hagberg talks about how they built this product, which allows roboticists to perform computer vision and machine learning at the edge.

Through Fluid Design Consultancy, we have come into contact with the RealSense line of products extensively. The ability to add an off-the-shelf component that instantly gives us depth data is a win by itself. The ability to leverage an active, open-source community that develops software packages on top of this to extract more value out of the product is magical.

Joel Hagberg

Joel Hagberg leads the Intel® RealSense™ Marketing, Product Management, and Customer Support teams. He joined Intel in 2018 after a few years as an Executive Advisor working with startups in the IoT, AI, Flash Array, and SaaS markets. Before his Executive Advisor role, Joel spent two years as Vice President of Product Line Management at Seagate Technology with responsibility for their $13B product portfolio. He joined Seagate from Toshiba, where Joel spent 4 years as Vice President of Marketing and Product Management for Toshiba’s HDD and SSD product lines. Joel joined Toshiba with Fujitsu’s Storage Business acquisition, where Joel spent 12 years as Vice President of Marketing, Product Management, and Business Development. Joel’s Business Development efforts at Fujitsu focused on building emerging market business units in Security, Biometric Sensors, H.264 HD Video Encoders, 10GbE chips, and Digital Signage.